In email marketing, one of the most effective strategies you can use is A/B testing. But what exactly is A/B testing? Put simply, it is a method of comparing two versions of the same element to find out which one performs better.

In email marketing, this usually means testing different subject lines, calls to action, or other key elements to see which variation produces more opens, clicks, or conversions from your subscribers.

What Is A/B Testing in Email Marketing?

Email marketing campaigns are vital for companies looking to connect with their customers and keep them up to date on their offerings. A/B testing, also known as split testing, is a technique for comparing two versions of a message to determine which one produces better results.

In practice, each version of the campaign goes to a separate group of subscribers, and you compare how the two perform. Tests can cover a wide range of elements, such as the subject line, layout, call to action, images, and content. The results are used to optimize individual campaigns and increase engagement, click-through rates, and conversion rates.
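As a rough illustration of the mechanics, the sketch below randomly divides a subscriber list into two equal halves, one for each version. Random assignment is what makes the comparison fair: the two groups differ only in which email they receive. The `split_ab` helper and the example addresses are hypothetical; email platforms normally perform this split for you.

```python
import random


def split_ab(subscribers, seed=None):
    """Randomly assign subscribers to group A or group B (hypothetical helper).

    Shuffling before splitting means that, on average, the two groups are
    alike in every respect except the email version they receive.
    """
    shuffled = list(subscribers)           # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # seeded RNG gives a reproducible split
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]


if __name__ == "__main__":
    # Hypothetical subscriber list, for illustration only.
    subscribers = [f"user{i}@example.com" for i in range(10)]
    group_a, group_b = split_ab(subscribers, seed=42)
    print("Version A goes to:", group_a)
    print("Version B goes to:", group_b)
```

With an odd number of subscribers, group B simply receives one extra person.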

A/B tests help businesses learn what drives engagement among their email subscribers and how specific campaign elements resonate with their target audience.

For this reason, A/B testing is an essential part of an effective email marketing strategy for any business that wants to maximize its impact and reach with customers.

The Purpose of A/B Testing

The main purpose of A/B testing is to increase the success of marketing campaigns by methodically testing different elements and finding the best combination. By testing multiple versions of the same email, marketers can uncover important insights into what connects with their target audience. This allows them to make data-informed decisions to enhance future campaigns.

A/B testing also applies beyond email marketing. The same technique can be used in many other areas of digital marketing, including website design, ad copy, and social media posts. This flexibility makes A/B testing a vital tool for any marketer aiming to stay competitive and achieve meaningful business results.

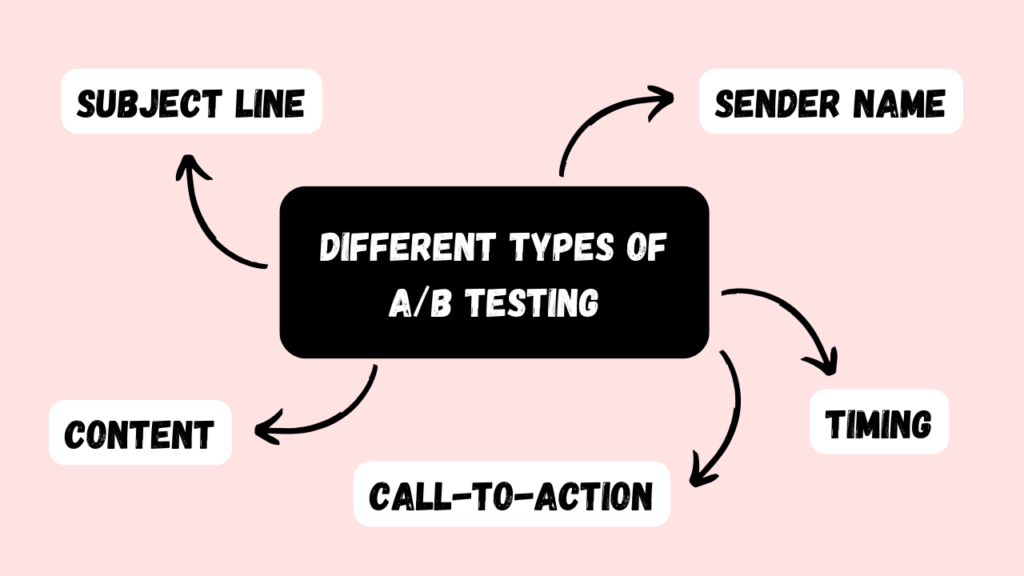

Different Types of A/B Testing

Subject Line

The subject line is a critical part of any email marketing campaign, as it’s the first thing subscribers see and can determine the success of the campaign. A/B testing allows you to test two different subject lines against each other to find out which performs better.

There are many ways to vary subject lines for testing, such as using different lengths, tones, emojis or punctuation, or approaches to the offer.

Content

Testing different content is another important A/B testing technique, and several types of content can be tested. The subject line is the first thing recipients see and affects open rates. The email copy itself is another, since variations in wording can improve engagement and conversions.

Images can also be A/B tested by varying their placement, size, and type. Dynamic content, personalized based on recipient behavior, can be tested as well.

Finally, the call-to-action (CTA) is a key content element that can be tested by changing the text, color, placement, or size to optimize conversions. Overall, testing different content elements can optimize engagement and performance.

Call-to-Action

A call to action (CTA) prompts potential customers to take a specific action, such as subscribing, purchasing, or filling out a form. Well-designed CTAs drive engagement and conversions. A/B testing your CTAs reveals which version is more effective by varying elements such as color, placement, and wording.

For example, you could test a blue button against a green one, placement at the end of the email against placement in the body, or “Buy Now” against “Learn More.” Comparing the results shows which CTA version performs better, and you can apply that lesson to future campaigns.

Sender Name

Another important A/B test compares sender names, for example a person’s name versus the company name. The sender name is one of the first things recipients see, so it affects open and engagement rates. A recognizable, trustworthy name can increase opens.

Timing

Send timing is another valuable A/B test, because knowing the best send time improves results. Sending the same content at different times or on different days shows when recipients are most likely to open and read your emails. Factors such as industry, audience, and location influence the ideal timing.

Retesting timing regularly lets you adapt as consumer behavior changes, so your send times stay optimal for maximum engagement.

Tips for running more effective A/B tests

Email marketing platforms like Campaign Monitor make A/B testing easy with their drag-and-drop builders. You can quickly create variations by editing a copy of your email and sending both versions. Before running A/B tests, though, use these strategic tips to improve your chances of success.

Have a hypothesis

To maximize your chances of increasing conversions, form a basic hypothesis before you start about why one variation might outperform another. For example:

- “Personalizing the subject line with subscribers’ names could make our emails stand out, increasing open rates.”

- “Using a button instead of text for the CTA could draw attention and get more clicks.”

Statements like these define your test and its goals, and they keep your focus on changes likely to have a real impact.

Prioritize your tests

When you have many test ideas, prioritize the ones likely to have the biggest impact that are also easiest to implement. One way to do this is the ICE score:

- Impact: How big of an effect might this test have?

- Confidence: How sure are you it will work?

- Ease: How easy is it to set up?

Score each idea on these three factors, then run the high-impact, high-confidence, easy tests first.
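ICE scoring is often done by rating each factor from 1 to 10 and averaging the three; some teams multiply them instead. The sketch below assumes the averaging variant. The `TestIdea` class, the idea names, and their scores are hypothetical, loosely echoing the example hypotheses above.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    name: str
    impact: int      # 1-10: how big an effect the test might have
    confidence: int  # 1-10: how sure you are that it will work
    ease: int        # 1-10: how easy it is to set up

    @property
    def ice_score(self) -> float:
        # Simple average of the three factors (assumed variant; some teams multiply).
        return (self.impact + self.confidence + self.ease) / 3


def prioritize(ideas):
    """Return test ideas sorted from highest to lowest ICE score."""
    return sorted(ideas, key=lambda idea: idea.ice_score, reverse=True)


if __name__ == "__main__":
    # Hypothetical backlog and scores, for illustration only.
    backlog = [
        TestIdea("Personalized subject line", impact=7, confidence=6, ease=9),
        TestIdea("Button vs. text CTA", impact=6, confidence=7, ease=8),
        TestIdea("Full template redesign", impact=8, confidence=4, ease=2),
    ]
    for idea in prioritize(backlog):
        print(f"{idea.ice_score:.1f}  {idea.name}")
```

Here the template redesign has the highest impact score but falls to the bottom because it is hard to build and uncertain, which is exactly the trade-off ICE is meant to surface.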

Build on learnings

Not every test will increase conversions, but you can learn from each one to optimize future campaigns.

SitePoint’s “Versioning” newsletter A/B tested design elements for a week. Some decreased conversions, but they learned what works for their audience. This ultimately led to a 32% conversion increase.

Best Practices for A/B Testing

Test One Element at a Time

Email marketing is crucial for connecting with customers, but standing out amid the flood of emails people receive can be challenging. This is where A/B testing comes in handy.

One of the most important A/B testing best practices is to change only one element of the email at a time, keeping everything else the same. For example, if a company wants to test an email’s headline, it should modify only the headline and leave the rest of the email unchanged.

Testing one element at a time produces accurate results because you know exactly which change drove the outcome. If you change several things at once, you cannot tell which one caused the difference. Single-variable tests are also simpler to set up and analyze.
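If you build campaign variants in a script, one way to enforce the one-change rule is to create version B by copying version A and overriding a single field, then confirm that exactly one field differs. This is only a sketch: the `EmailVersion` fields and both helper functions are hypothetical, not part of any email platform’s API.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class EmailVersion:
    # Hypothetical fields describing one version of a campaign email.
    subject: str
    sender_name: str
    cta_text: str
    send_hour: int


def changed_fields(a, b):
    """List the names of fields whose values differ between two versions."""
    return [f.name for f in fields(a) if getattr(a, f.name) != getattr(b, f.name)]


def make_variant(control, **change):
    """Copy the control version, overriding exactly one field."""
    if len(change) != 1:
        raise ValueError("change exactly one element per A/B test")
    variant = replace(control, **change)
    # Guard against a "change" that leaves the field identical to the control.
    if changed_fields(control, variant) != list(change):
        raise ValueError("the new value must differ from the control")
    return variant


if __name__ == "__main__":
    version_a = EmailVersion("Spring sale starts today", "Acme Store", "Buy Now", 9)
    version_b = make_variant(version_a, cta_text="Learn More")
    print(changed_fields(version_a, version_b))  # → ['cta_text']
```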

Changes made this way are also grounded in data rather than guesswork.

In summary, testing one element at a time is a key A/B testing best practice, and it can help companies get better results from their email marketing.

Use a Sufficiently Large Sample

A critical aspect of effective A/B testing is using a sample large enough to support statistically significant conclusions. Statistical significance helps you judge whether the results reflect how your wider audience behaves rather than random chance. One key reason to have an adequate sample is to avoid being misled by false positives.

A false positive happens when there appears to be a significant difference between the test and control groups, but the difference is actually due to chance. Small samples produce noisy results in which such chance differences can look large, so an adequate sample, combined with a proper significance test, helps ensure that observed differences reflect genuine responses to the change rather than randomness.

It’s also important to choose the significance level for your test: the probability of a false positive you are willing to accept. The usual standard is 5% (a 95% confidence level), meaning that if the two versions truly performed the same, there would be only a 5% chance of seeing a difference as large as the one observed. Depending on the stakes of the test, a less strict threshold, such as a 90% confidence level, may be acceptable.
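Once a test has finished, one standard way to check whether a difference in click or conversion rate is larger than chance alone would explain is a two-proportion z-test. The sketch below is that textbook test, not necessarily what any particular email platform runs, and it compares the p-value with a significance level of 0.05 (a 95% confidence level). The click counts are hypothetical. Because the test relies on a normal approximation, it is most trustworthy when each group has a reasonable number of both conversions and non-conversions.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    conv_a, conv_b: number of conversions (e.g. clicks) in each group
    n_a, n_b: number of recipients in each group
    Returns (z, p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions perform the same.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: A got 200 clicks from 5,000 sends, B got 250.
    z, p = two_proportion_z_test(200, 5000, 250, 5000)
    alpha = 0.05
    print(f"z = {z:.2f}, p = {p:.3f}")
    print("Significant" if p < alpha else "Not significant (could be chance)")
```

With these made-up numbers (a 4% versus 5% click rate), the p-value comes out at roughly 0.016, below 0.05. A much smaller gap, such as 200 versus 210 clicks, gives a p-value far above 0.05 and should be treated as noise.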

Sample size also depends on the effect size: the magnitude of the difference between the groups. The larger the effect, the smaller the sample needed to reach significance; a subtle effect requires a larger sample to detect.
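The link between effect size and sample size can be made concrete with the common normal-approximation formula for comparing two proportions. The sketch below estimates how many recipients each version needs; the 80% power default (the chance of detecting a real effect) and the example click rates are assumptions chosen for illustration, not figures from this article.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate recipients needed in EACH group to detect a change in rate.

    baseline_rate: current rate of the control version (e.g. 0.04 for 4%)
    expected_rate: rate you hope the new version achieves
    alpha: two-sided significance level; power: chance of detecting a real effect
    """
    if expected_rate == baseline_rate:
        raise ValueError("rates must differ to have an effect to detect")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


if __name__ == "__main__":
    # Hypothetical 4% baseline click rate.
    print(sample_size_per_group(0.04, 0.05))  # small lift
    print(sample_size_per_group(0.04, 0.08))  # large lift
```

With a 4% baseline, detecting a rise to 5% needs roughly 6,700 recipients per version, while detecting a jump to 8% needs only about 550, which is the "larger effect, smaller sample" rule in action.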

Define a Clear Objective

A well-defined goal is essential for a successful A/B testing approach. Without a clear aim, it is difficult to measure success accurately, so establish an objective aligned with your overall marketing strategy. Consider what you want to accomplish with the test. Higher open, click-through, or conversion rates? The best subject line or send time? Whatever it is, make sure it supports your overarching goals.

Test Frequently

Email marketing is constantly evolving, so regular A/B testing is crucial for staying ahead. Testing lets you experiment with elements such as subject lines, design, and CTAs to learn what drives engagement and conversions with your audience.

Testing is not a one-time event. Consistent testing and optimization are required to keep pace with changing consumer behavior and industry trends, and they help keep your emails relevant, personalized, and effective. The insights from each test inform future campaigns and boost performance, which is why routine testing is essential.

Common pitfalls to avoid when A/B testing email marketing campaigns

A/B testing is an effective way to improve email marketing campaigns, but you need to be aware of common mistakes that can produce inaccurate data or wasted effort. Here are a few traps to avoid when A/B testing email marketing:

- Testing too many variables at once: Changing several variables concurrently makes it hard to pinpoint which one affected performance. Focus on one variable at a time instead.

- Using too small a sample: With a very small sample, real differences may not reach statistical significance, and the differences you do see may be due to chance. Use an adequate sample size to get meaningful results.

- Lacking clear goals: Without well-defined goals and objectives for your A/B test, you may be unsure what you want to accomplish or how to measure success.

- Testing for too short a time: If you don’t run the test long enough, the results may be unreliable. Run it for an adequate period to obtain a representative sample.

- Omitting a control group: A control group, usually your current unchanged version, is essential for gauging the test’s impact. Without one, you cannot accurately measure the effect of the change.

- Disregarding the results: Analyze the results of each A/B test and use the insights to refine your email campaigns. Ignoring the results means missing the chance to make data-driven improvements.

By avoiding these common pitfalls, you can run effective A/B tests that improve your email campaigns and deliver better outcomes for your business.

Conclusion

Email marketing is an effective tool for businesses of all sizes to connect with customers. One way to boost engagement is by A/B testing email campaigns. A/B testing involves sending two versions of an email to see which performs better.

The goal is to make data-driven decisions by testing individual email components like subject lines, images, calls-to-action, etc. This allows businesses to find out what drives open and click rates. They can then optimize campaigns accordingly to increase conversions. Marketers should thoughtfully plan and run A/B tests, tracking metrics to measure success.

By continuously improving email campaigns through testing, businesses can achieve higher engagement, revenue growth, and stronger customer relationships.